Later this year, Apple will roll out technology that lets it detect known child sexual abuse material (CSAM) and report it to law enforcement, in a way the company says safeguards customers’ privacy. “CSAM detection” is one of several new capabilities that Apple says will help protect children who use its services from online dangers.

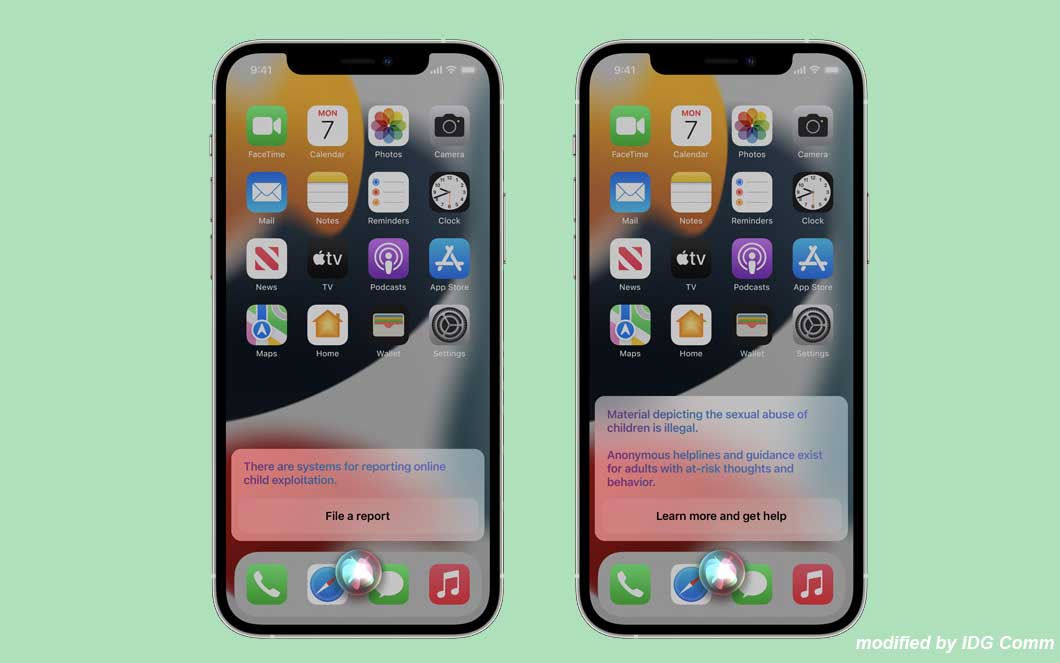

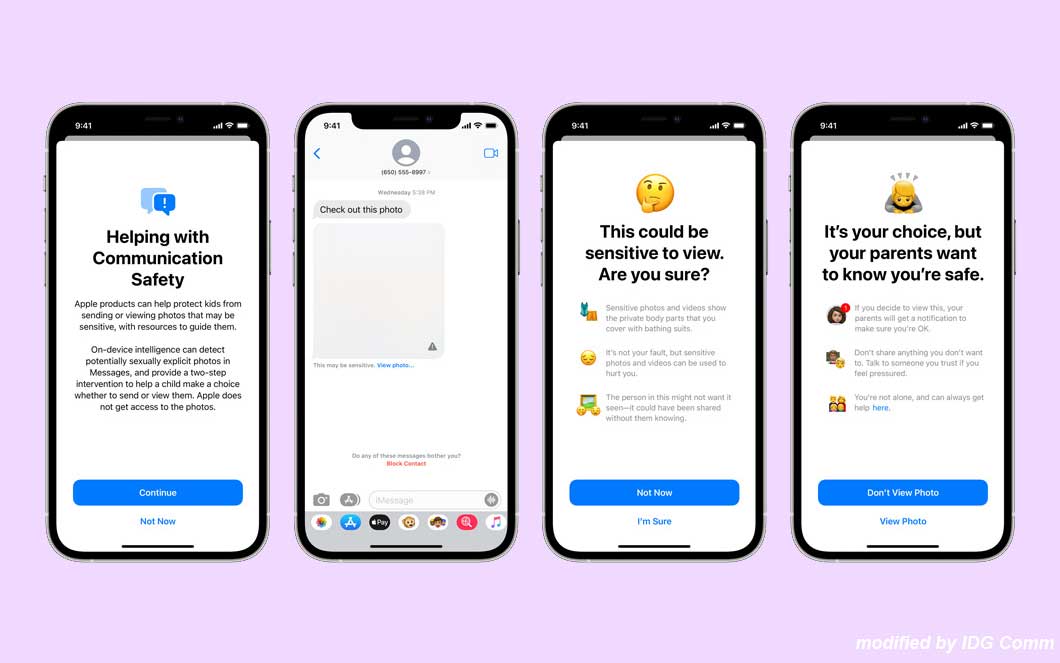

The new capabilities include a filter that blocks potentially sexually explicit photos sent through a child’s iMessage account. Another feature steps in when a user tries to search for CSAM-related terms using Siri or Search.

Most cloud services, including Dropbox, Google, and Microsoft, already scan users’ files for potential violations of their terms of service and for illegal content such as CSAM. But Apple has long resisted scanning customers’ files in the cloud, instead letting them encrypt their data before it reaches Apple’s servers.

According to Apple, its new CSAM detection technology, NeuralHash, works on the user’s device instead. It can flag a user who uploads a previously identified child abuse image to iCloud, and the images are not decrypted until a threshold is reached and a series of checks has been cleared.

News of Apple’s plans emerged on Wednesday, when Matthew Green, a cryptography professor at Johns Hopkins University, disclosed the existence of the new technology in a series of tweets. The revelation drew a negative reaction from some security professionals and privacy activists, as well as from users accustomed to an approach to security and privacy at Apple that most other businesses lack.

To alleviate these worries, Apple has built in multiple layers of encryption, designed so that several steps must be completed before anything reaches Apple’s final human reviewers. NeuralHash will ship with iOS 15 and macOS Monterey, due in the next month or two. It works by turning the photos on a user’s iPhone or Mac into a unique string of letters and numbers, known as a hash. With an ordinary hash function, changing the image even a little changes the hash, which can prevent a match. Apple says NeuralHash tries to make identical and visually similar images, such as cropped or edited versions, generate the same hash.
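To make the difference concrete, here is a minimal sketch in Python. It is not NeuralHash, which Apple describes as its own technology; it swaps in a much simpler "average hash" as a stand-in for the general idea of a perceptual hash. The tiny 4x4 grayscale "image", the brightening edit, and the function names `sha256_hex` and `average_hash` are all assumptions made up for illustration.

```python
import hashlib


def sha256_hex(pixels):
    """Ordinary cryptographic hash of the raw pixel bytes."""
    flat = bytes(p for row in pixels for p in row)
    return hashlib.sha256(flat).hexdigest()


def average_hash(pixels):
    """Toy perceptual hash (NOT NeuralHash): one bit per pixel,
    set to 1 when the pixel is brighter than the image's mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)


# Hypothetical 4x4 grayscale image: dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]

# Hypothetical small edit: brighten every pixel slightly.
edited = [[min(255, p + 3) for p in row] for row in original]

# The cryptographic hash changes completely after the small edit...
crypto_changed = sha256_hex(original) != sha256_hex(edited)
# ...while the toy perceptual hash stays the same.
perceptual_same = average_hash(original) == average_hash(edited)

print("SHA-256 changed:", crypto_changed)
print("average hash unchanged:", perceptual_same)
```

The point of the sketch is only the contrast: an ordinary hash treats the edited picture as a different file, while a perceptual hash is built to tolerate small visual changes.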

Before an image is uploaded to iCloud Photos, its hash is checked on the device against a database of known child abuse image hashes, which is provided by child protection organizations such as the National Center for Missing & Exploited Children (NCMEC) and others. NeuralHash uses a cryptographic technique called private set intersection to detect a hash match without revealing what the image is or alerting the user.
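The idea behind private set intersection can be sketched with a classic textbook construction based on commutative blinding (Diffie-Hellman style). This is a toy and an assumption on our part, not Apple's protocol: the prime `P`, the helper names, and the sample byte strings are hypothetical, the parameters are far too weak for real use, and in this simple version the device learns the overlap, whereas the system described above is said not to alert the user.

```python
import hashlib
import math
import secrets

# Toy modulus (a Mersenne prime). Assumption for illustration only;
# real deployments use carefully chosen elliptic-curve groups.
P = 2**127 - 1


def hash_to_group(item: bytes) -> int:
    """Map an item to a number modulo P."""
    return int.from_bytes(hashlib.sha256(item).digest(), "big") % P


def random_key() -> int:
    """Pick a secret exponent coprime to P - 1, so blinding is one-to-one."""
    while True:
        k = secrets.randbelow(P - 3) + 2
        if math.gcd(k, P - 1) == 1:
            return k


def blind(values, key):
    """Raise every value to a secret power; blinding with two keys commutes."""
    return [pow(v, key, P) for v in values]


def private_intersection(device_items, server_items):
    device_key, server_key = random_key(), random_key()

    # Device blinds its hashes and sends them to the server.
    device_blinded = blind([hash_to_group(i) for i in device_items], device_key)
    # Server adds its own blinding (order preserved) and sends them back,
    # together with its own database blinded under the server key only.
    device_double = blind(device_blinded, server_key)
    server_blinded = blind([hash_to_group(i) for i in server_items], server_key)
    # Device adds its blinding to the server's values and compares.
    server_double = set(blind(server_blinded, device_key))

    return [item for item, d in zip(device_items, device_double)
            if d in server_double]


matches = private_intersection(
    [b"photo-hash-a", b"photo-hash-b", b"photo-hash-c"],
    [b"photo-hash-b", b"known-hash-x"],
)
print(matches)
```

Neither side ever sends its raw hashes: each sees only values blinded by a key it does not know, yet equal items still end up as equal doubly blinded values.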

The results are uploaded to Apple, but they cannot be read on their own. Apple uses another cryptographic principle, called threshold secret sharing, which lets it decode the contents only once a user passes a threshold of known child abuse images in their iCloud Photos. According to the company’s example, an account would need to contain ten images of child abuse content before Apple could act.
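Threshold secret sharing is easiest to see in Shamir's well-known scheme, sketched below. Whether Apple's system uses this exact construction is not stated here, so treat it as an assumption; the prime `PRIME`, the function names, the secret value, and the choice of 30 shares are hypothetical, while the threshold of ten mirrors the example above.

```python
import secrets

# Toy prime field; an assumption for illustration.
PRIME = 2**127 - 1


def split_secret(secret, n_shares, threshold):
    """Hide `secret` as the constant term of a random polynomial of
    degree threshold - 1, and hand out points on that polynomial."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, n_shares + 1)]


def reconstruct(shares):
    """Lagrange interpolation at x = 0 recovers the constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


secret = 123456789                      # stands in for a decryption key
shares = split_secret(secret, n_shares=30, threshold=10)

print(reconstruct(shares[:10]) == secret)    # any ten shares are enough
print(reconstruct(shares[5:15]) == secret)   # a different ten work too
print(reconstruct(shares[:9]) == secret)     # nine shares do not suffice
```

In this picture, each matching image would contribute one share, so a key only becomes recoverable once the number of matches reaches the threshold.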

At that point, Apple can decrypt the matching images, manually check the content, disable the user’s account, and report the images to NCMEC, which then passes them to law enforcement. Apple says this process is more mindful of privacy than scanning files in the cloud, because NeuralHash searches only for known child abuse imagery, not new material. Apple acknowledged that false positives are possible, but said an appeals process is in place if an account is flagged in error.

Apple has published technical details on its website about how NeuralHash works. The design was reviewed by cryptography experts and praised by child protection organizations.

However, despite broad support for efforts to combat child sexual abuse, there is still a surveillance component that many people feel uncomfortable handing over to an algorithm, and some security experts are calling for more public discussion before Apple rolls the technology out to users.

Why now and not sooner is a big question. Apple said its privacy-preserving CSAM detection did not exist until now. But firms like Apple have also had to deal with substantial pressure from the US government and its allies to weaken or backdoor the encryption used to protect their users’ data, so that law enforcement can investigate serious crimes.

Tech giants have refused efforts to backdoor their systems, but they have met resistance to efforts to shut out government access further. Although some data saved in iCloud is encrypted in a way that even Apple cannot access, Reuters reported last year that Apple dropped a plan to encrypt users’ full phone backups to iCloud after the FBI objected.

News of Apple’s new CSAM detection tool, which arrived without public discussion, also raised concerns that the technology could be abused to flood victims with child abuse images, leading to their accounts being flagged and closed. But Apple played down the concerns and said a manual review would examine the evidence for possible misuse.

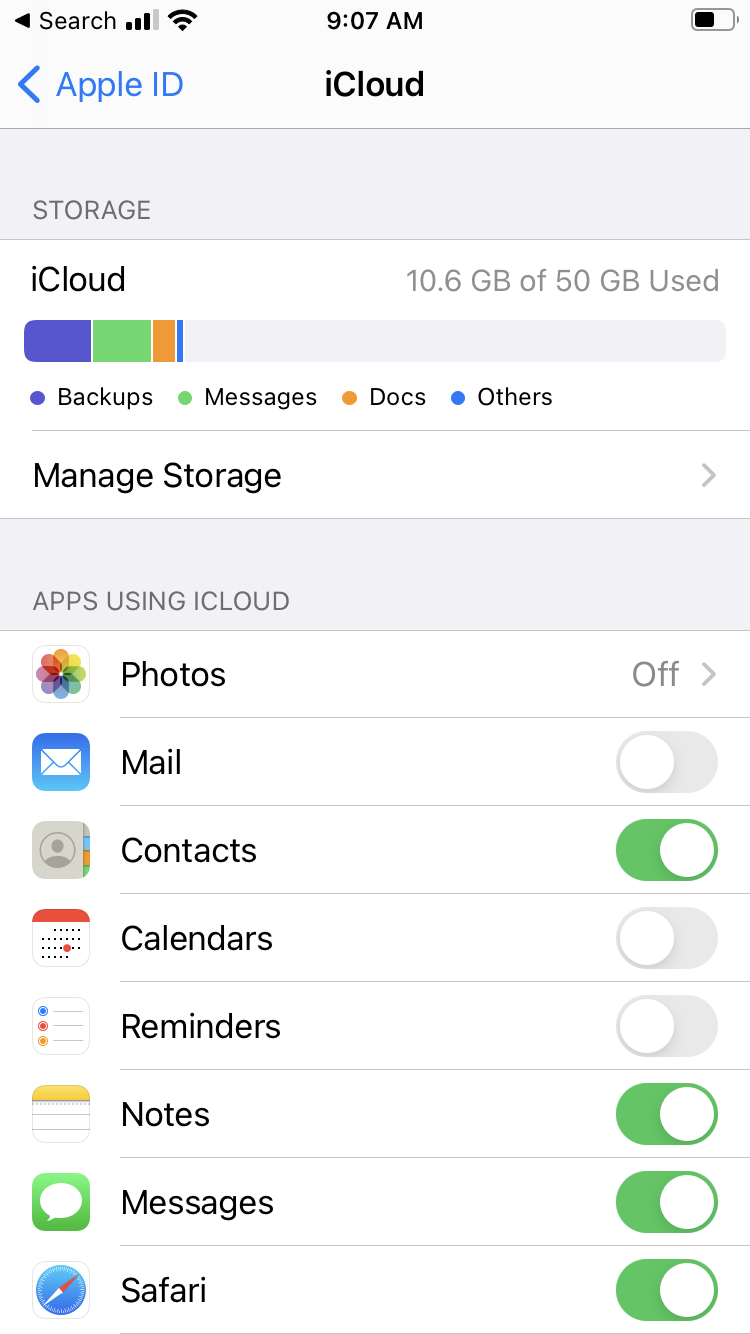

Apple said NeuralHash will roll out in the US first, but would not say if or when it will be used internationally. Until recently, businesses like Facebook were obliged to switch off their child abuse detection tools throughout the European Union after the practice was mistakenly outlawed. Apple said the feature is technically optional, in that you do not need to use iCloud Photos, but it will be a requirement for users who do. After all, your device belongs to you, but Apple’s cloud does not.

How to Stop iPhone Photo Scanning for Child Abuse Content

You may not be able to opt out entirely, because the feature is integrated into the iPhone ecosystem. But if you stop iCloud Photos uploads, you can prevent the scanning. Use this setting: